Administration at Scale: The Emerging Role of AI in Modern Claims Management

12/16/2025

Jennifer Keough: Co-Founder and CEO, JND Legal Administration

Neil Zola: Co-Founder and Executive Managing Director, JND Legal Administration

Ben Sexton: SVP Innovation and Strategy, JND Legal Administration

As originally published by The National Law Review

The settlement amount may grab the headline, but for class members, the real impact lies in the administration. Was the notice campaign successful? Was it easy to file a claim? Was the distribution of settlement funds handled smoothly?

As class action settlements grow in complexity and scale—and as fraud becomes more sophisticated—the traditional infrastructure of claims administration, built on manual processes and human review, is increasingly under strain.

Artificial intelligence (AI), once a buzzword in legal tech circles, has become a practical necessity. When used correctly, AI doesn’t replace thoughtful adjudication or administrative oversight—it strengthens them. It helps administrators design more effective notice programs, review documentation at scale, detect fraud, and ensure fairness in distribution.

Still, you might ask: isn’t AI just the next “blockchain”? A flash-in-the-pan marketing term, hyped in pitch decks and investor updates, destined to fade outside a few niche applications?

Maybe for some. But in claims administration, and across the legal industry more broadly, AI isn’t just a future bet. It’s already reshaping how work gets done.

Let’s paint a picture of how AI is transforming claims administration. While what follows is a hypothetical, with invented companies and an invented court case, the technology is real and already in use today.

The Case: Jane Doe v. Fictional Mobile Company

In this case, the plaintiffs alleged that Fictional Mobile Company (FMC) unnecessarily charged subscribers for its “Mobile Protect” device protection plan, particularly users of iPhone models that were already under warranty and unlikely to need third-party coverage. The settlement defines the class as all Fictional customers located in the United States who paid for Mobile Protect while using an iPhone 12, 13, or 14 between October 1, 2020 and April 30, 2025.

Because FMC has a twelve-month retention policy for customer data, the company isn't able to provide granular information about former customers, so claimants are required to provide proof with their claim. To qualify for relief, class members must submit a Fictional bill or account statement showing the service charge and their eligible device.

The challenges for the claims administrator are clear: millions of potential class members, a narrow class definition, and documentation requirements that demand more than a simple name-and-address match.

Step 1: Outreach to the Class

In the hypothetical Jane Doe v. Fictional Mobile Company, the class has been certified under Rule 23(b)(3), and with it comes the obligation to provide “the best notice that is practicable under the circumstances.” The notice plan, negotiated by the parties and approved by the court, sets the framework: digital channels, defined reach goals, and approved summary and long-form notice content.

But the work doesn’t stop there.

Within the bounds of the certified plan, you bring in AI to sharpen execution. You’re not just looking for “iPhone users.” You’re using AI to uncover subtle behavioral signals that correlate with likely class membership. The system highlights patterns that you wouldn’t have predicted, like high conversion rates among users who recently searched for “how to cancel Fictional Mobile phone insurance” or posted in forums about unexplained FMC charges.

AI-based synthetic audiences help you target those segments, tailor the messaging, and create notice imagery that effectively drives engagement. For example: “Not sure if you had Mobile Protect? Check your old Fictional Mobile bill—you could be eligible for up to $100.” That headline, paired with an AI-generated bill image, consistently outperforms more direct legal phrasing and alternative creative options across test groups—especially among former customers who have since moved to other carriers.

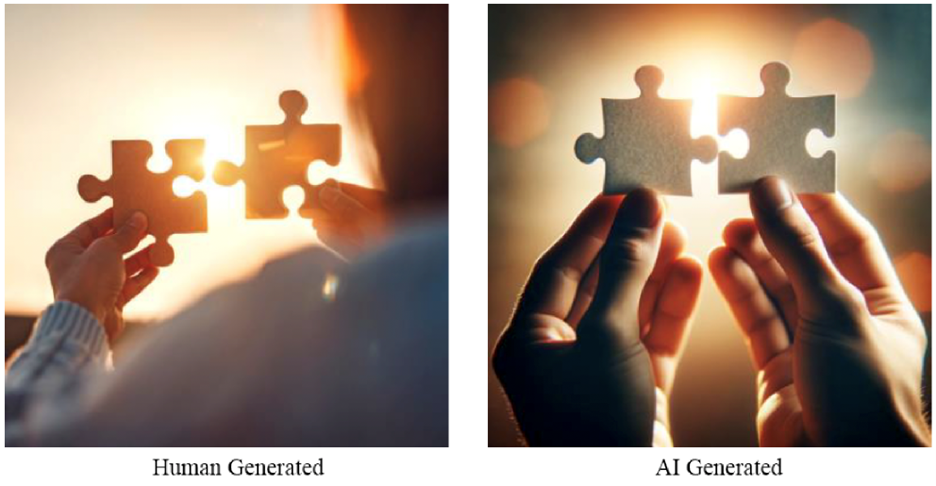

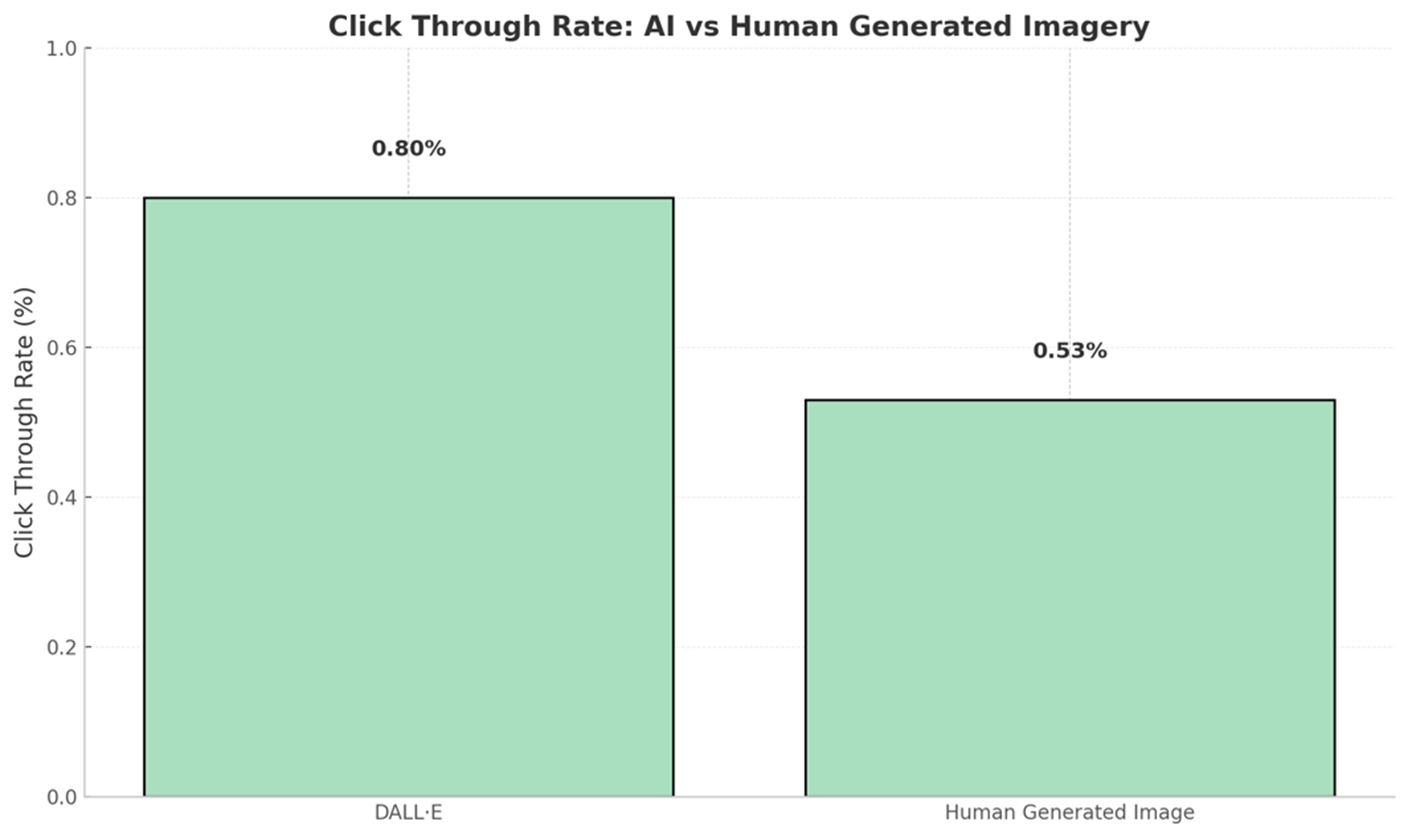

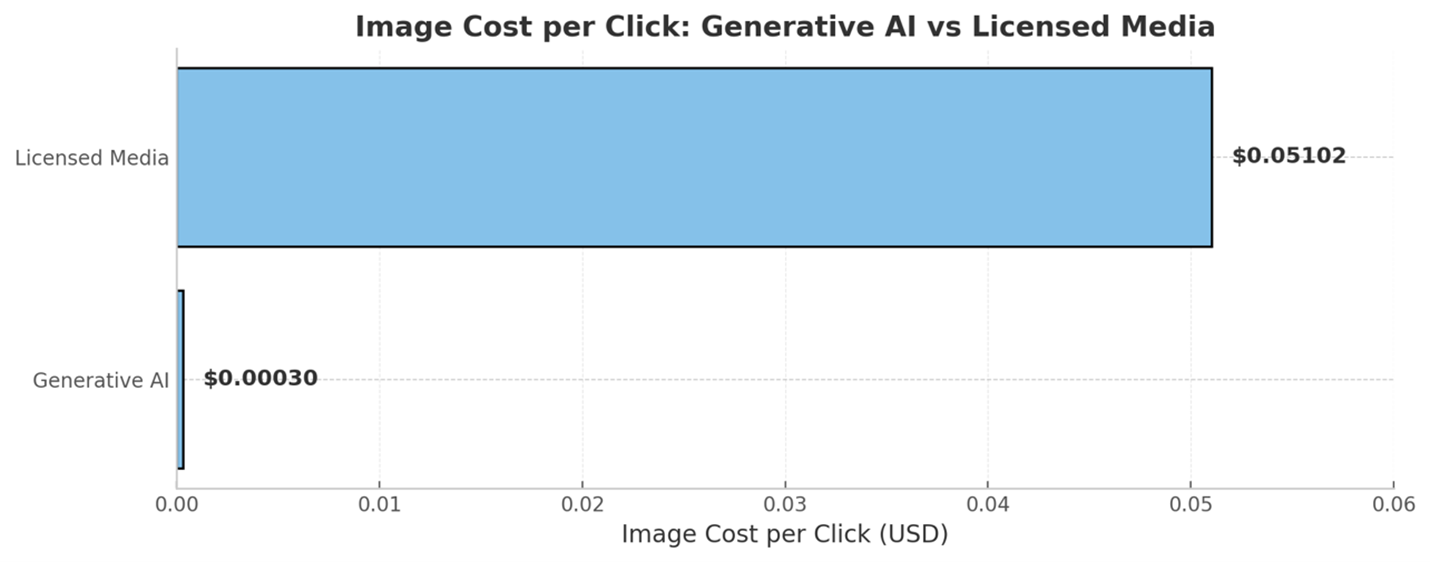

In fact, a recent academic study found that AI-generated imagery, when given the same creative brief as a human marketing professional, outperformed the human-selected visuals. What’s more, the AI-generated image cost $0.04, compared to $5 for the stock photo, a 99.2% savings over licensed media.

Placement gets smarter, too. The AI tool recommends ad slots before mobile tutorial videos on YouTube, late-night search ads (when people are likely combing through their email receipts), and even sponsored posts in bill-organizing apps. You're still operating within the notice parameters the court approved, but you're using AI to enhance the effectiveness of your campaign.

And when the notice campaign performance data starts rolling in, you’re not just meeting the Rule 23 standards for reach and engagement—you’re exceeding them. You're reaching the class, and more importantly, the class is responding by filing claims.

Step 2: Supporting the Filing Process

Once on the court-approved case website for our hypothetical case, potential class members have questions: How do I know if I had Mobile Protect? Is my iPhone model eligible? What kind of documentation do I need, and where do I find it? The vast majority of the time, these questions concern the same aspects of the settlement.

Here, an AI-powered Q&A tool reduces friction. It isn’t just a glorified FAQ. It’s trained on the specific terms of the Jane Doe v. Fictional Mobile Company settlement and can handle a range of real-world claimant scenarios. Someone might ask, “I canceled Fictional Mobile in 2021—am I still eligible?” or “Is a screenshot from my Fictional Mobile app enough to prove I had Mobile Protect?” The chatbot can interpret the question, apply the settlement logic, and give an answer that’s accurate, consistent, and in plain language.

Even better, AI Q&A tools operate 24/7, resolving 80–90% of initial inquiries on first contact, and freeing up human time to focus on helping claimants with more individualized issues.

On the technical side, the chatbot helps claimants troubleshoot common upload issues, like unsupported file formats or incomplete image captures from the FMC app. It can guide claimants through the process of retaking a screenshot or converting a file on mobile devices, tasks that could otherwise increase abandonment rates or jam up live support.

For those who need extra help, the AI knows when to escalate to a human agent, which preserves resources for more complex inquiries. And perhaps best of all, there’s no hold music, no dropped calls, and no “We are experiencing high call volumes. You are number 583 in the queue.”

According to MIT Technology Review, nearly 90% of businesses report improved time to resolution, and over 80% have noted enhanced call volume processing using AI. A shorter time to resolution is better for everyone.

Step 3: Fraud Detection

We can tell you who isn’t stuck in the helpdesk queue: fraudsters.

In any settlement offering cash relief, fraud is inevitable. But with the rise of bots and generative AI, fraud is no longer just inevitable—it’s industrialized.

A 2024 Reuters article discusses a recent case involving Grande Cosmetics: a $6 million settlement drew more than 6.5 million claims—yet only about 110,000 were deemed valid by the claims administrator and third-party fraud screening service.

This was not an anomaly. The same Reuters article highlights the scale of the problem, citing a report that found that fraudulent claims grew from just over 400,000 in 2021 to over 80 million in 2023. And fraud has consequences. Fraudulent claims that go undetected by administrators typically receive a payout, which in turn can dilute payouts for legitimate claimants—a serious problem for the fair administration of justice.

But you’re smart, and you came prepared to fight fire with fire. Your fraud prevention team scrubs the claims database using a proprietary, multi-layered fraud detection process, powered in part by AI. Each claim is assigned a risk score based on multiple factors, including:

- Spikes in claim volume from the same IP address, device, or browser fingerprint

- Near-duplicate documents with only slight variations

- Metadata inconsistencies and layout anomalies—hallmarks of synthetic document generation

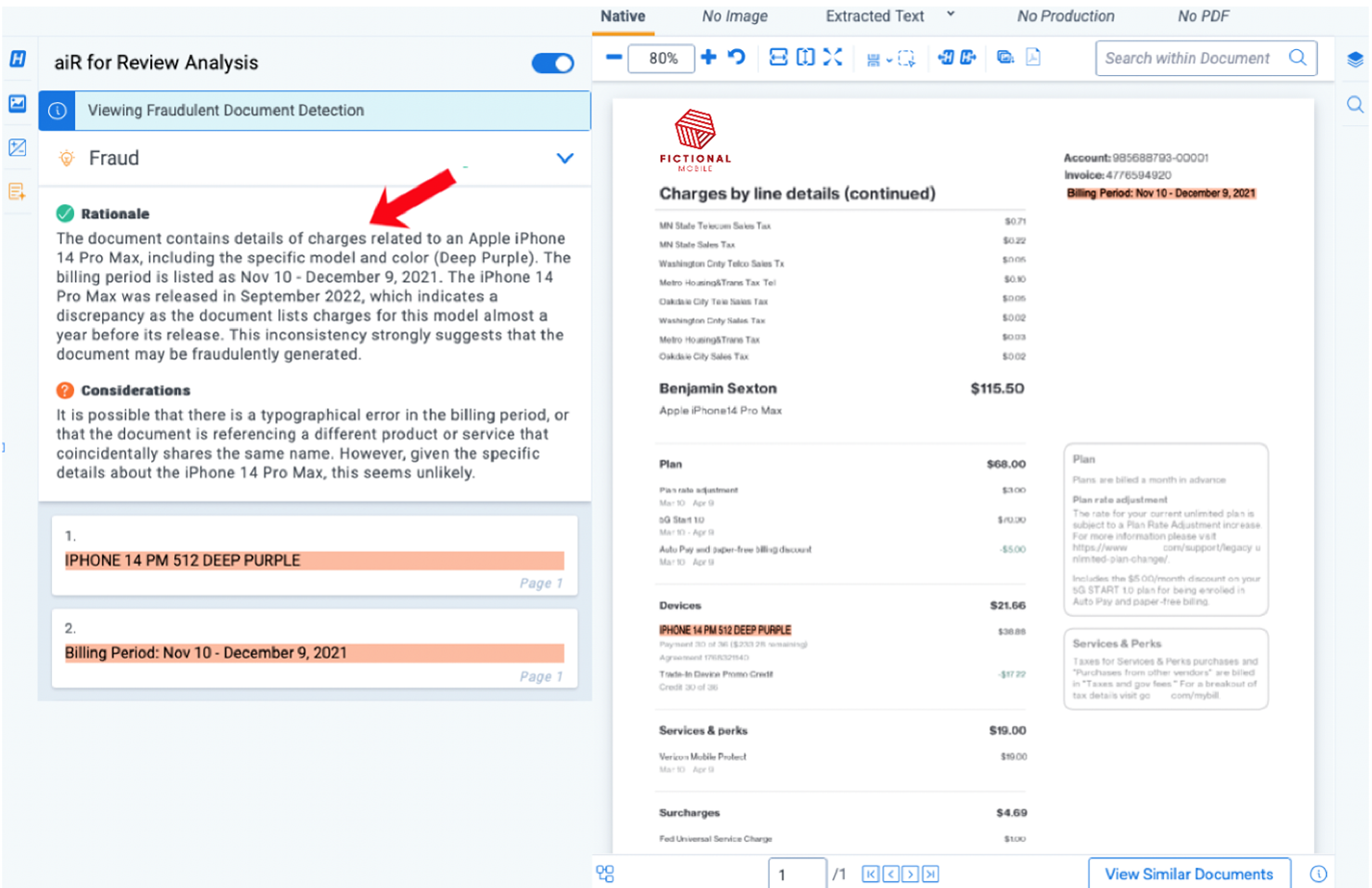
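To make the idea of a risk score concrete, here is a minimal sketch of how the factors listed above might be combined. It is not the proprietary process described here: the `Claim` fields, the weights, and the spike threshold are all hypothetical values chosen for illustration, and the document-level flags are assumed to come from upstream checks.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Claim:
    claim_id: str
    ip_address: str
    near_duplicate_doc: bool  # assumed to be set by an upstream similarity check
    metadata_anomaly: bool    # assumed to be set by an upstream metadata/layout check


# Hypothetical weights and threshold, chosen only for illustration.
WEIGHTS = {"ip_spike": 0.4, "near_duplicate": 0.35, "metadata_anomaly": 0.25}
IP_SPIKE_THRESHOLD = 3  # claims from one IP before it counts as a spike


def score_claims(claims):
    """Return {claim_id: risk score between 0 and 1} from three simple signals."""
    claims_per_ip = Counter(c.ip_address for c in claims)
    scores = {}
    for c in claims:
        score = 0.0
        if claims_per_ip[c.ip_address] >= IP_SPIKE_THRESHOLD:
            score += WEIGHTS["ip_spike"]
        if c.near_duplicate_doc:
            score += WEIGHTS["near_duplicate"]
        if c.metadata_anomaly:
            score += WEIGHTS["metadata_anomaly"]
        scores[c.claim_id] = round(score, 2)
    return scores


if __name__ == "__main__":
    sample = [
        Claim("A", "203.0.113.5", False, False),
        Claim("B", "203.0.113.9", True, True),
        Claim("C", "203.0.113.9", False, False),
        Claim("D", "203.0.113.9", True, False),
    ]
    for claim_id, score in score_claims(sample).items():
        print(claim_id, score)
```

In a sketch like this, a high score would route a claim to secondary review rather than reject it outright.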

In our hypothetical case, the administrator sees waves of submissions that appear valid at first glance; however, closer inspection reveals hundreds, if not thousands, of nearly identical FMC bills. Fraudsters purchased lists of compromised names and addresses on the dark web and have used them to generate documents to substantiate their fraudulent claims.
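One simple way to surface nearly identical bills is to compare the text extracted from each upload. The sketch below uses Python's standard `difflib` module for that comparison. It assumes the text has already been extracted from each document, and the 0.9 similarity cutoff is a hypothetical value, not one taken from any real workflow.

```python
from difflib import SequenceMatcher
from itertools import combinations

SIMILARITY_THRESHOLD = 0.9  # hypothetical cutoff for "nearly identical"


def near_duplicate_pairs(docs, threshold=SIMILARITY_THRESHOLD):
    """docs maps claim_id -> extracted text. Return (id_a, id_b, ratio) for similar pairs."""
    pairs = []
    for (id_a, text_a), (id_b, text_b) in combinations(docs.items(), 2):
        ratio = SequenceMatcher(None, text_a, text_b).ratio()
        if ratio >= threshold:
            pairs.append((id_a, id_b, round(ratio, 3)))
    return pairs


if __name__ == "__main__":
    docs = {
        "c1": "Fictional Mobile bill Account 1001 Mobile Protect $7.99 Device iPhone 13",
        "c2": "Fictional Mobile bill Account 1002 Mobile Protect $7.99 Device iPhone 13",
        "c3": "Water utility invoice for residential service, due upon receipt",
    }
    for id_a, id_b, ratio in near_duplicate_pairs(docs):
        print(id_a, id_b, ratio)
```

Comparing every pair of documents grows quadratically with claim volume, so a real system working at the scale described here would likely need hashing or indexing to narrow the candidates first.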

These aren’t crude forgeries; they’re sophisticated fakes, sometimes indistinguishable from the real thing to the human eye. But there are usually subtle “tells,” such as a mismatch in metadata, inconsistent formatting, or tiny, unusual artifacts that go unnoticed by the naked eye. In this example, a fraudulent phone bill would have gone undetected had AI not noticed that the iPhone model listed on it hadn’t yet been released when the bill was supposedly issued.
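The release-date "tell" is easy to express as a rule once the device and bill date have been extracted. The sketch below is only an illustration: the function name is hypothetical, and the dates in the lookup table are the U.S. launch dates of the base models, included as illustrative reference data.

```python
from datetime import date

# Illustrative reference data: U.S. launch dates of the base models.
DEVICE_RELEASE_DATES = {
    "iPhone 12": date(2020, 10, 23),
    "iPhone 13": date(2021, 9, 24),
    "iPhone 14": date(2022, 9, 16),
}


def bill_predates_device(device, bill_date):
    """Return True if the bill is dated before the listed device was released."""
    release = DEVICE_RELEASE_DATES.get(device)
    if release is None:
        return False  # unknown device: leave the claim to other checks
    return bill_date < release


if __name__ == "__main__":
    # A bill dated March 2021 that lists an iPhone 14 cannot be genuine.
    print(bill_predates_device("iPhone 14", date(2021, 3, 1)))
    print(bill_predates_device("iPhone 12", date(2021, 3, 1)))
```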

These aren’t patterns a human reviewer would likely catch, at least not consistently, and not at scale. AI flags them for secondary review before payments are issued, helping preserve the integrity of the fund and ensuring that relief goes to actual class members without slowing down the process.

Step 4: Document Review at Scale

After scrubbing the database for fraud, you need to review claimants’ documentation to determine their eligibility. Each claim must include an FMC bill or account summary showing both enrollment in Mobile Protect and ownership of a qualifying iPhone. Reviewing these documents manually, especially at volume, is slow, inconsistent, and costly.

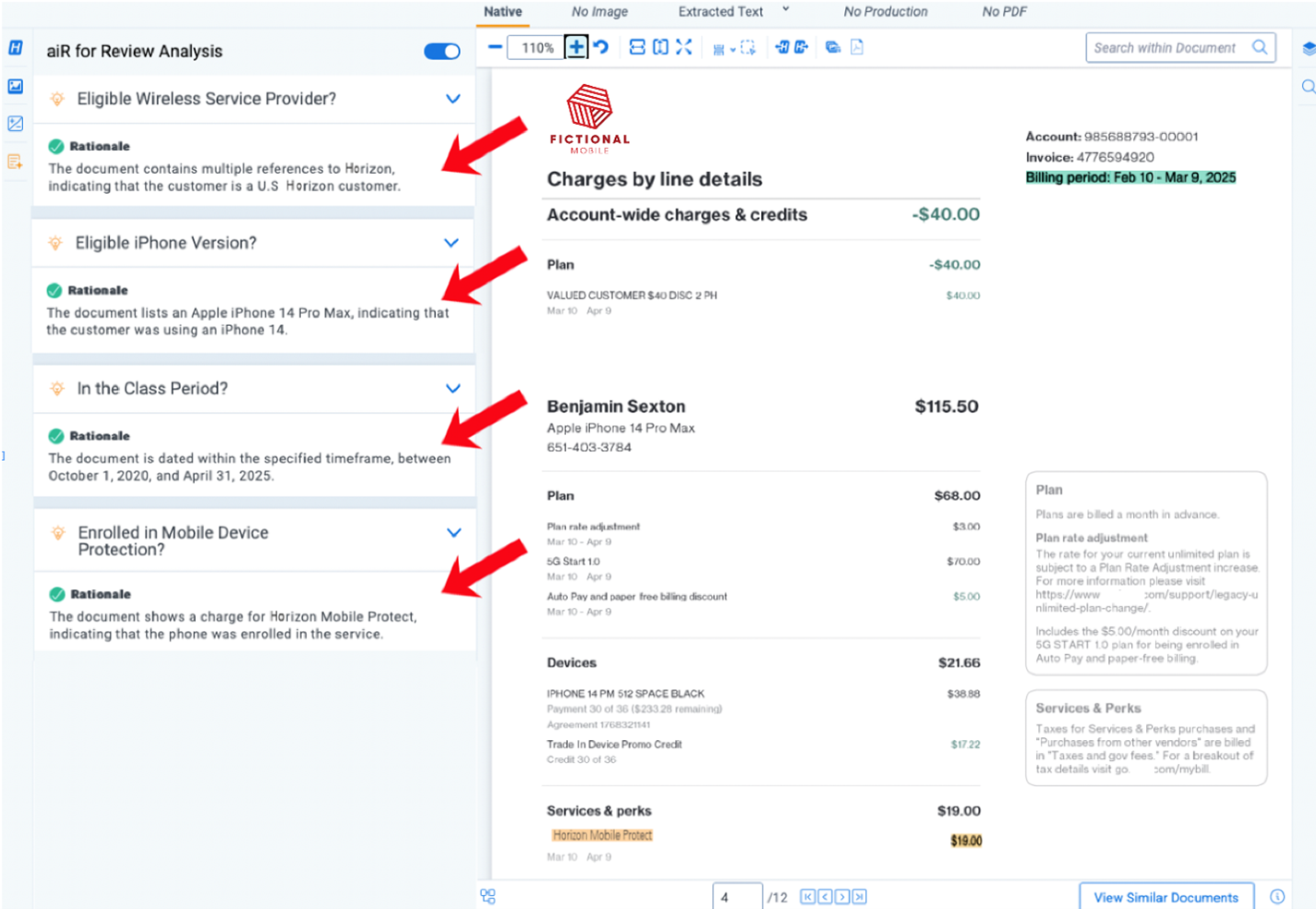

Here, you turn to generative AI to assist with review. These models aren’t just screening for keywords; they’re interpreting documents in context, much like a human reviewer would. The system is prompted with the settlement criteria and asked to assess each uploaded file: Does it include a Mobile Protect line item? Is the billing date within the class period? Does the device listed match an iPhone 12, 13, or 14?

For each document, the AI generates a short, structured rationale—for example: “Claimant was charged for Mobile Protect on April 2022 bill; device listed as iPhone 13; within eligibility window.” Claims are then categorized as clearly valid, clearly deficient, or requiring further review. Now imagine being able to run this analysis across a half-million documents per day.
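The three-way categorization can be pictured as a small routing step that runs after the model has extracted the relevant fields from a document. The sketch below assumes that extraction has already happened. The `ExtractedBill` structure and category names are hypothetical, and the class period end is taken as April 30, 2025, the last day of April. The categories route claims for human follow-up; they are not final eligibility decisions.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Class period and devices from the hypothetical settlement.
CLASS_START = date(2020, 10, 1)
CLASS_END = date(2025, 4, 30)
ELIGIBLE_DEVICES = {"iPhone 12", "iPhone 13", "iPhone 14"}


@dataclass
class ExtractedBill:
    # Fields assumed to be extracted upstream; None means "could not be read".
    has_mobile_protect: Optional[bool]
    billing_date: Optional[date]
    device: Optional[str]


def triage(bill):
    """Return (category, rationale) for one extracted document."""
    fields = (bill.has_mobile_protect, bill.billing_date, bill.device)
    if any(value is None for value in fields):
        return "needs_review", "One or more fields could not be read."
    problems = []
    if not bill.has_mobile_protect:
        problems.append("no Mobile Protect line item")
    if not (CLASS_START <= bill.billing_date <= CLASS_END):
        problems.append("billing date outside class period")
    if bill.device not in ELIGIBLE_DEVICES:
        problems.append("device is not an iPhone 12, 13, or 14")
    if problems:
        return "clearly_deficient", "; ".join(problems)
    rationale = (
        f"Mobile Protect charged on {bill.billing_date:%B %Y} bill; "
        f"device listed as {bill.device}; within eligibility window."
    )
    return "clearly_valid", rationale


if __name__ == "__main__":
    print(triage(ExtractedBill(True, date(2022, 4, 15), "iPhone 13")))
    print(triage(ExtractedBill(True, date(2019, 6, 1), "iPhone 11")))
    print(triage(ExtractedBill(None, date(2022, 4, 15), "iPhone 13")))
```

Keeping the rationale alongside the category is what makes the first pass auditable: a reviewer can see why a claim landed where it did.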

This isn’t a human replacement; it’s a force multiplier. Generative AI provides a faster, more consistent first pass across hundreds of thousands, or even millions, of claims, while maintaining transparency, providing an audit trail, and preserving the ability to escalate anything that falls outside the bounds of clear eligibility. This allows the human review team to focus its time on edge cases, provide more individualized service, and ensure high-quality results.

The Bigger Picture

In this hypothetical case, AI was not used to make legal decisions or determine eligibility. It was used to triage, assist, detect, and document—freeing up human reviewers to focus on nuanced tasks and exceptions. It helped support claimant access while mitigating fraud. It made the process more consistent, auditable, and scalable.

This is the emerging model for AI in settlement administration: not as a substitute for oversight, but as infrastructure that helps experienced teams meet modern challenges, especially when class sizes are large, documents are required, and fraud is widespread.

For legal professionals involved in class actions—whether on the plaintiff side, defense side, or from the bench—understanding these tools will be increasingly important. They are not theoretical and, in our opinion, no longer optional. AI is already part of how fairness is being administered.

Disclaimer: The hypothetical case study is fictional and used by the authors to illustrate the ways AI tools can help design and execute class action settlements. Any similarity to actual firms or actual court cases is purely coincidental.
